12 Practical Examples Of wget Command In Linux

wget is a GNU command-line utility for downloading files from the internet. It can retrieve files from servers over popular protocols such as HTTP, HTTPS, and FTP. Because it is non-interactive, it can keep running in the background without user input, which makes it well suited to scripts and cron jobs. GNU Wget was written by Hrvoje Nikšić and is currently maintained by Tim Rühsen, Darshit Shah, and Giuseppe Scrivano.

In this article, let us look at 12 useful examples of using the wget utility.

wget Command Examples

Downloading a file from web

$ wget <URL>

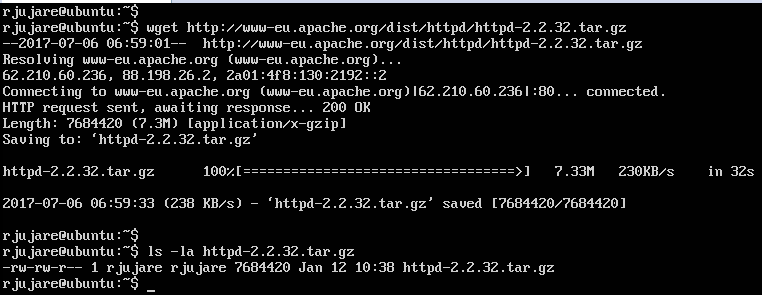

This command downloads the file at the given URL into the current directory. The screenshot above shows the Apache HTTP Server source code (a compressed file) being downloaded from the URL: http://www-eu.apache.org/dist/httpd/httpd-2.2.32.tar.gz.

wget output contains the following details:

- The name of the file being downloaded

- A progress bar showing percentage downloaded

- The size of the file that has been downloaded

- Current download speed

- The estimated time to complete the download

Downloading a file with specified filename

To specify a different filename the -O option (uppercase O) is used.

$ wget <URL> -O <file_name>
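As a minimal sketch of how this might look inside a script, the helper below wraps the `-O` option. The function name `download_as` and the example URL are illustrative assumptions, not part of wget.

```shell
# Hypothetical helper: save the file at URL ($1) under the local name ($2).
download_as() {
    url="$1"
    out="$2"
    # -O (uppercase O) writes the downloaded document to the given file name.
    wget -O "$out" "$url"
}

# Example call (the URL is a placeholder):
# download_as "https://example.com/archive.tar.gz" "latest.tar.gz"
```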

Silent download

To download silently, with no output on the terminal, use the “-q” option:

$ wget -q <URL>
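Since a quiet download prints nothing, a script usually has to rely on wget's exit status to know whether it worked. The sketch below assumes a hypothetical helper named `quiet_fetch`; the messages it prints are our own, not wget's.

```shell
# Hypothetical helper: download quietly and report the result ourselves,
# because -q suppresses wget's own output.
quiet_fetch() {
    if wget -q "$1"; then
        echo "downloaded: $1"
    else
        # wget exits with a non-zero status when the download fails.
        echo "failed: $1" >&2
        return 1
    fi
}

# Example call (the URL is a placeholder):
# quiet_fetch "https://example.com/archive.tar.gz"
```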

Resuming partially downloaded file

To resume a partially downloaded file, use the “-c” option:

$ wget -c <URL>
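On an unreliable connection, resuming is often combined with a retry limit. The following is a sketch only: `resume_fetch` is a hypothetical helper, and the default of 5 attempts is an arbitrary choice made for this example rather than wget's own default.

```shell
# Hypothetical helper: resume the download of URL ($1), making at most
# $2 attempts (5 if omitted; that fallback is this example's choice).
resume_fetch() {
    url="$1"
    tries="${2:-5}"
    # -c continues a partially downloaded file;
    # --tries sets how many attempts wget makes before giving up.
    wget -c --tries="$tries" "$url"
}

# Example call (the URL is a placeholder):
# resume_fetch "https://example.com/large.iso" 10
```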

Downloading files in background

With the “-b” option, wget starts the download in the background and writes its output to a log file (wget-log by default) instead of the terminal:

$ wget -b <URL>

Multiple downloads

For this, use the “-i” option followed by a file containing the URLs, one URL per line. wget goes through the list and downloads each URL in turn. How simple is that? :-)

$ wget -i <file_name>

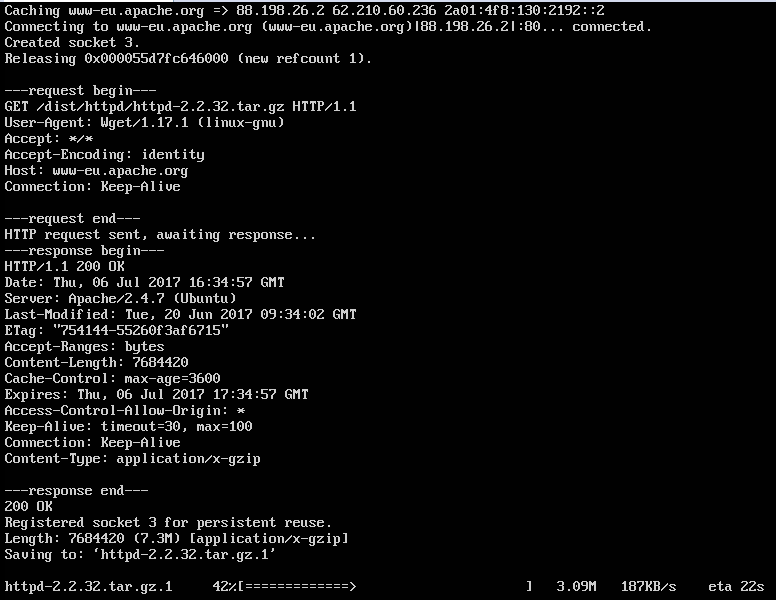
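To make the file format concrete, here is a small sketch that writes a two-line URL list and defines a helper to download it. The file name `urls.txt`, the helper `fetch_list`, and both URLs are placeholders chosen for this example.

```shell
# Write a URL list, one URL per line (both URLs are placeholders).
cat > urls.txt <<'EOF'
https://example.com/file1.tar.gz
https://example.com/file2.tar.gz
EOF

# Hypothetical helper: download every URL listed in the given file.
fetch_list() {
    # -i reads the URLs to download from the named file.
    wget -i "$1"
}

# Example call:
# fetch_list urls.txt
```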

Enable debug information

With the “-d” option, wget prints more detailed information, which may be useful when troubleshooting a problem.

$ wget -d <URL>
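Debug output can be long, so it may be convenient to capture it in a file for later inspection. The sketch below assumes a hypothetical helper `debug_fetch` and a log file name of our choosing. Note that some wget builds are compiled without debug support, in which case `-d` has no effect.

```shell
# Hypothetical helper: download URL ($1) with debug output saved to a
# log file ($2, or wget-debug.log if omitted; the name is our choice).
debug_fetch() {
    url="$1"
    log="${2:-wget-debug.log}"
    # -d turns on debug output; -o sends all messages to the log file.
    wget -d -o "$log" "$url"
}

# Example call (the URL is a placeholder):
# debug_fetch "https://example.com/archive.tar.gz" trace.log
```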

Downloading a file from untrusted URL

It is possible to bypass the verification of the SSL/TLS certificate by using the “--no-check-certificate” option.

$ wget <URL> --no-check-certificate

Downloading file from password protected sites

For both FTP and HTTP connections, the following options can be used to pass the user credentials:

$ wget --user=<user_id> --password=<user_password> <URL>

However, these parameters can be overridden using the “--ftp-user” and “--ftp-password” options for FTP connections and the “--http-user” and “--http-password” options for HTTP connections.

For FTP connections:

$ wget --ftp-user=<user_id> --ftp-password=<user_password> <URL>

For HTTP connections:

$ wget --http-user=<user_id> --http-password=<user_password> <URL>

Specifying a password on the command line is not recommended, so prefer the “--ask-password” option, which prompts for the password and keeps it out of the shell history.

$ wget --ftp-user=<user_id> --ask-password <FTP_URL>

$ wget --http-user=<user_id> --ask-password <HTTP_URL>

Redirecting wget log to a file

Using the “-o” option (lowercase “o”), wget's log output can be redirected to a file.

$ wget -o <log_file> <URL>
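When several downloads should share one log, the related `-a` option appends to the log file instead of overwriting it as `-o` does. The following is a sketch under that assumption; `log_fetch` is a hypothetical helper name.

```shell
# Hypothetical helper: download each URL in turn, collecting all of
# wget's messages in a single log file ($1).
log_fetch() {
    log="$1"
    shift
    for url in "$@"; do
        # -a appends to the log file rather than overwriting it.
        wget -a "$log" "$url"
    done
}

# Example call (the URLs are placeholders):
# log_fetch downloads.log "https://example.com/a.iso" "https://example.com/b.iso"
```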

Downloading a full website

One of the useful features of wget is mirroring: with the “-m” option, an entire website can be downloaded.

$ wget -m <URL>
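In practice, mirroring is often combined with a few other options so that the local copy can be browsed offline. The sketch below shows one possible combination using wget's long option names; the helper name `mirror_site` is hypothetical, and whether these extra options are wanted depends on the site.

```shell
# Hypothetical helper: mirror the site at URL ($1) for offline viewing.
mirror_site() {
    # --mirror           long form of -m
    # --convert-links    rewrite links so they point at the local copies
    # --page-requisites  also fetch images, CSS, and other files pages need
    # --no-parent        never ascend above the starting directory
    wget --mirror --convert-links --page-requisites --no-parent "$1"
}

# Example call (the URL is a placeholder):
# mirror_site "https://example.com/docs/"
```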

Specifying download speed limits

Using the “--limit-rate” option, the download speed can be capped. The limit can be expressed in bytes, kilobytes (with the k suffix), or megabytes (with the m suffix).

$ wget --limit-rate=<user_rate> <URL>

For example, to restrict the speed to 200 kilobytes per second:

$ wget --limit-rate=200k http://www-eu.apache.org/dist/httpd/httpd-2.2.32.tar.gz
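Because wget is non-interactive, several of the options covered in this article can be combined into a helper suitable for a script or cron job. This is only a sketch: the name `limited_fetch` and the 200k fallback rate are assumptions made for illustration.

```shell
# Hypothetical helper: quietly download URL ($1), resuming any partial
# file and capping the speed at rate $2 (200k if omitted).
limited_fetch() {
    url="$1"
    rate="${2:-200k}"
    # -q: no output, -c: resume a partial file, --limit-rate: cap the speed
    wget -q -c --limit-rate="$rate" "$url"
}

# Example call (the URL is a placeholder):
# limited_fetch "https://example.com/large.iso" 1m
```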

Conclusion

wget has many more advanced features that make it a very powerful command. It performs well over slow or unstable network connections: if a download is interrupted by a network problem, wget automatically retries and tries to continue from where it left off. It can download files larger than 2 GB on a 32-bit system. If you are not a fan of the CLI, give GWget, a GUI utility for wget, a try.

Ramakrishna Jujare
